Ethics in People Analytics and AI at Work

Best Resources Discovered Monthly

Edition #7 – December 2020

There is a severe knowledge gap. Business leaders’ and HR practitioners’ quantitative abilities are based on the descriptive or inferential statistics that we all learned. Machine learning is entirely different. To understand it and evaluate it to the level of dealing with potential risks, let alone algorithm auditing, a systematic approach and a practical methodology are needed.

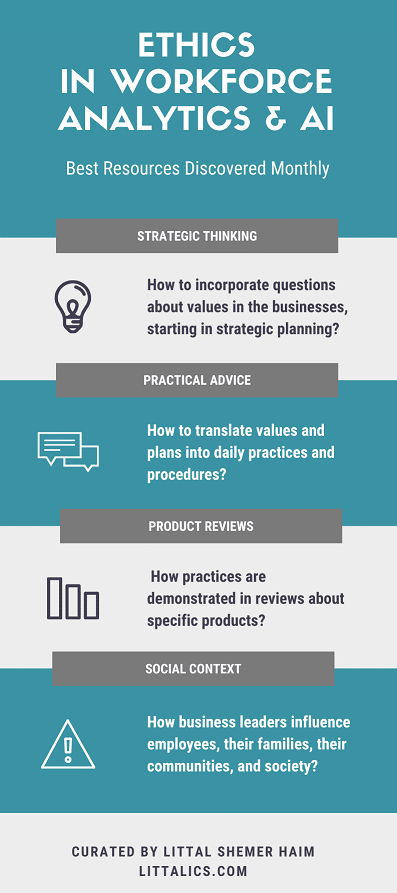

Part of my continuous learning, collaboration, and contribution, which will hopefully lead to an articulation of a solution for evaluating the ethics of workforce AI, is a comprehensive resource list that will be updated monthly. For now, I decided to include four categories in it: strategic thinking, practical advice, product reviews, and a social context.

Why these categories? I hope that such a categorization will facilitate learning in the field. Particularly, leaders need to understand how to incorporate questions about values in their businesses, starting in their strategic planning. Then, they may need a helping hand to translate those values and plans into daily practices and procedures. Those practices can be demonstrated in discussions and reviews about specific products. But at the end of the day, business leaders influence the employees, their families, their communities, and society. Therefore, this resource list must include a social perspective too.

Workforce AI Ethics in strategic thinking

Navigate the road to Responsible AI

Ben Lorica

The practice of Responsible AI encompasses more than just privacy and security. It also includes concerns around safety and reliability, fairness, transparency, and accountability. The breadth and depth of domain knowledge required to address those disparate areas mean that deploying AI ethically and responsibly will involve cross-functional team collaboration, new tools and processes, and proper support from key stakeholders.

The recent regulatory changes (required in GDPR and CCPA) prioritized privacy, security, and transparency principles. However, a shift in Responsible AI priorities is reflected in surveys. Results confirmed that security and transparency were indeed the top two principles executives intend to address, but many indicate that fairness—or testing for bias—has become a top priority. To develop tools around these ethical principles, stakeholders will need to agree on precise definitions of each. Organizations need to establish a clear understanding of the limitations of the tools they are using. They need to learn how to match models and techniques to their specific problems and challenges.

Organizations are still reactive with regard to AI. They use revenue-generating measurements without adequately addressing ethical issues. Effective Responsible AI should integrate and implement the principles as early in the product development process as possible. The inclusion of Responsible AI principles should also be routine and part of the production culture. One of the main challenges is that current methods of measuring business success don’t translate to measuring the success of Responsible AI implementations. Key performance indicators (KPIs) for business are very different from academic benchmarks, and traditional quantitative business metrics aren’t designed to encompass the qualitative aspects of Responsible AI principles.

#AI #ethics involve cross-functional team collaboration, new tools, and processes, and proper support from key stakeholders. Current methods of measuring #business #success don’t translate to measuring the success of Responsible AI implementations. https://t.co/qZhyhC895b

— Littal Shemer Haim (@Littalics) December 29, 2020

Workforce AI Ethics in practical advice

Design of hiring algorithms can double diversity in firms

Danielle Li

Automated approaches codify existing human biases to the detriment of candidates from underrepresented groups. Hiring algorithms use information on existing employees to predict which job applicants should be selected. In many cases, relying on algorithms that predict future success based on past success leads to favoring applicants from groups that have traditionally been successful.

Instead of designing algorithms that view hiring as a static prediction problem, researchers suggest designing algorithms that treat the challenge of finding the best job applicants as a continual learning process. In a recent study, the authors developed and evaluated hiring algorithms designed to explicitly value exploration to learn about people who might not have been previously considered for jobs. The algorithm incorporated exploration bonuses that increase with its degree of uncertainty about a candidate’s quality, which tends to be higher for underrepresented candidates. For example, such candidates could be applicants with unusual majors, applicants who attended less common colleges, applicants with different types of work histories, and applicants who are demographically underrepresented at the firm.

Research reveals significant differences in the candidates selected by the exploratory versus static algorithms, i.e., a higher share of selected applicants among minorities. The overall findings are clear: “When you incorporate exploration into the algorithm, you improve the quality of talent and hire more diverse candidates. Firms that continue to use static approaches in their algorithms risk missing out on quality applicants from different backgrounds.”
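To make the idea of an exploration bonus more concrete, here is a minimal sketch. It uses a generic upper-confidence-bound (UCB) rule, which is my own assumption for illustration and not necessarily the method used in the study. The profile names and counts are hypothetical.

```python
import math


def ucb_score(successes, trials, total_trials, bonus_weight=1.0):
    """Estimated quality plus a bonus that grows as uncertainty grows.

    Generic UCB1-style rule, chosen for illustration only.
    """
    if trials == 0:
        # No evidence at all: maximal uncertainty, so explore first.
        return float("inf")
    estimated_quality = successes / trials
    exploration_bonus = bonus_weight * math.sqrt(
        2 * math.log(total_trials) / trials
    )
    return estimated_quality + exploration_bonus


# Hypothetical history per applicant profile:
# (hires that worked out, candidates previously selected)
history = {
    "common_major": (60, 100),
    "unusual_major": (2, 4),
}
total = sum(trials for _, trials in history.values())

# A static algorithm ranks profiles by past success rate only.
static_pick = max(history, key=lambda k: history[k][0] / history[k][1])

# An exploratory algorithm adds the uncertainty bonus to that rate.
exploratory_pick = max(
    history, key=lambda k: ucb_score(history[k][0], history[k][1], total)
)

print(static_pick, exploratory_pick)
```

In this toy data, the static rule prefers the profile with the longer track record, while the exploratory rule prefers the profile the firm knows little about, because few observations mean a large bonus. As more candidates from that profile are observed, the bonus shrinks and the estimate of quality takes over.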

"When you incorporate exploration into the #algorithm you improve the quality of #talent and #hire more diverse candidates. Firms that continue to use static approaches in their algorithms risk missing out on quality applicants from different backgrounds." https://t.co/RFydyiT6KN

— Littal Shemer Haim (@Littalics) December 6, 2020

Workforce AI Ethics in product reviews

World first for ethical AI and workplace equity

University of Technology Sydney

A workforce intelligence platform partnered with the University of Technology Sydney to deliver non-biased talent shortlisting algorithm validation. The project was a pioneering independent validation of Ethical AI. The research team has developed, tested, and iterated the ground-breaking assessment process before its use by industry partners to confirm that the AI outputs are fit for purpose and deliver actionable results.

Workforce AI deals with sensitive information about real people, so building trust in that process is critical. AI for good needs to be the standard. However, there has been no way to assess that properly. AI is not immune to bias in the data or the algorithms. Previously, the decision making has been hidden in a black box, and there has been no clear, defensible, independent, and objective validation demonstrating ethical AI. There are over 200 AI ethics frameworks and guidelines globally, but few have been operationalized. This project is a milestone in bringing independently audited certification to an innovative AI product.

Reejig uses big data and verified AI to help organizations understand and analyze the skills and capabilities in their talent ecosystem. It connects existing HR systems, cleanses and aggregates talent data, and unifies data across the enterprise. This, coupled with market, industry, and competitor intelligence and skills mapping, helps companies design their workforce of the future. The platform automatically matches potential candidates or employees to opportunities to remove negative unconscious bias from the process. It also helps HR users explain why talent has been recommended, to ensure compliance with Equal Opportunities and employment law.

A #workforce intelligence platform partnered with the University of Technology Sydney to deliver the non-biased talent shortlisting #algorithm #validation project, a pioneering independent validation of #Ethical #AI. https://t.co/gFAGyYlA2t

— Littal Shemer Haim (@Littalics) January 1, 2021

Workforce AI Ethics in a social context

Europe is adopting stricter rules on surveillance tech

Patrick Howell O’Neill

The European Union will adopt stricter rules on cyber-surveillance technologies like facial recognition and spyware. The new regulation requires companies to get a government license to sell technology with military applications. The main achievement is more transparency.

Governments must either disclose the destination, items, value, and licensing decisions for cyber-surveillance exports or make public the decision not to disclose those details. The regulation also includes guidance to “consider the risk of use in connection with internal repression or the commission of serious violations of international human rights and international humanitarian law.” The regulation’s effectiveness will depend on Europe’s national governments, which will be responsible for much of the implementation.

The new regulation mentions some specific surveillance tools, but it’s written to be more flexible and expansive. Still, how the rules are actually applied remains to be seen. Another obvious weakness of the new regulation is that it only covers EU member states. There’s an aim to create a global coalition of democracies willing to control the export of surveillance technologies more tightly. The reform makes sense. However, this regulation is only the beginning.

Europe is adopting stricter rules on #surveillance #tech. The goal is to make sales of technologies like #spyware and #facialrecognition more transparent in Europe first, and then worldwide. https://t.co/0a3Y1keJQ0

— Littal Shemer Haim (@Littalics) December 6, 2020

Previous Editions

Edition #6 – November 2020

Edition #5 – October 2020

Edition #4 – September 2020

Edition #3 – August 2020

Edition #2 – July 2020

Edition #1 – June 2020

Edition #6 – November 2020

Workforce AI Ethics in strategic thinking

Ethical Frameworks for AI Aren’t Enough

As organizations embrace AI with increasing speed, adopting ethical principles is widely viewed as one of the best ways to ensure AI does not cause unintended harm. However, ethical frameworks cannot be clearly implemented in practice, as there is not much that technical personnel can do with such high-level guidance.

This means that AI ethics frameworks serve more as good marketing campaigns than as a way to prevent AI from causing harm. To ensure these frameworks are developed and implemented, every AI ethics principle that an organization adopts should have clear metrics.

There is no one-size-fits-all approach to quantifying potential harms created by AI. Therefore, metrics for ethical AI vary across organizations, use cases, and regulatory jurisdictions. Yet, each can be drawn from a combination of existing research, legal precedents, and technical best practices. The article offers some resources, methods, and examples of metrics for fairness and privacy. Indeed, organizations don’t need to start from scratch, but they do need to measure AI’s potential harms before they occur.
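As an illustration of what a concrete fairness metric might look like, here is a minimal sketch of one commonly used measure: the ratio between the lowest and highest selection rates across groups. The choice of this metric, the group names, and the counts are my own assumptions for illustration, not taken from the article. The 0.8 threshold follows the "four-fifths" rule of thumb from US employment guidelines, and other jurisdictions or use cases may call for different metrics and thresholds.

```python
def selection_rate(selected, applicants):
    """Share of applicants in a group who were selected."""
    if applicants == 0:
        raise ValueError("group has no applicants")
    return selected / applicants


def impact_ratio(group_rates):
    """Lowest group selection rate divided by the highest."""
    highest = max(group_rates.values())
    if highest == 0:
        raise ValueError("no group had any selections")
    return min(group_rates.values()) / highest


# Hypothetical shortlisting outcomes: (selected, applicants) per group
outcomes = {
    "group_a": (50, 100),
    "group_b": (30, 100),
}

rates = {
    group: selection_rate(selected, applicants)
    for group, (selected, applicants) in outcomes.items()
}
ratio = impact_ratio(rates)

# Assumed threshold: the four-fifths rule of thumb.
THRESHOLD = 0.8
needs_review = ratio < THRESHOLD

print(f"impact ratio: {ratio:.2f}, needs review: {needs_review}")
```

A metric like this does not prove or disprove bias on its own. It is a simple number that can be tracked before deployment and over time, which is the kind of measurement the article asks organizations to attach to each principle.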

Organizations adopt high-level principles to ensure that their #AI is #ethical and causes no harm. But to give the principles teeth, organizations need concrete #metrics. There is no single approach that fits all industries, but #HRTech should have one. https://t.co/KUkCB6xHEM

— Littal Shemer Haim (@Littalics) November 15, 2020

Workforce AI Ethics in practical advice

How to Monitor Remote Workers — Ethically

Ben Laker, Will Godley, Charmi Patel, and David Cobb

Long-term remote work has necessitated questions about monitoring employee productivity. Is it possible to practice ethical surveillance? While 88% of organizations worldwide now either encourage or require their employees to work from home, resulting in productivity improvements across 77% of the workforce, there is an alarming surge in monitoring employee activity.

Thousands of companies started panic-buying surveillance software to take webcam pictures of their employees and to monitor their screenshots, login times, and keystrokes, all disclosed and legal. Workers’ concerns about privacy and security are not the only issue. Surveillance tools may reduce the productivity of employees who don’t feel trusted, and those employees may find creative ways to evade surveillance, for instance by using anti-surveillance software.

Recent research reveals some answers for ethical employee monitoring. It identifies five fundamental steps that companies should take: Accept that remote work is here to stay; Engage the workforce to reach agreement on which business activities actually require monitoring and ensure that the benefits of doing so are understood; Ensure that sufficient safeguards are introduced to prevent abuse; Be aware that discrimination can occur despite precautions put in place; Rebuild the trust levels that existed in office settings. The authors also advise setting goals and communicating expected outcomes, and offering employees greater autonomy, collaboration tools, and channels for sharing their presence.

5 steps for #ethical #remotework #monitoring: remote work is here to stay, reach agreement on which business activities actually require monitoring, introduce safeguards to prevent abuse, discrimination can occur, rebuild the trust levels. https://t.co/fbRC9xyxvA

— Littal Shemer Haim (@Littalics) November 15, 2020

Workforce AI Ethics in product reviews

This company embeds microchips in its employees, and they love it

Rachel Metz

This article explores the story of employees who volunteered to have a chip injected into their hands. The chip enables them to initiate activities by a hand wave, e.g., get into the office, log on to computers, and buy drinks in the company cafeteria.

The chips are about the size of a very large grain of rice. They don’t have batteries and instead get their power from an RFID (Radio Frequency Identification) reader when it requests data from the chip. User testimonials indicate that people get used to the chip as part of their routine, and most don’t want to remove it. Usage frequencies may reach 10-15 times a day.

Only some of the information stored on the chip is encrypted, so the privacy and security of that data are an obvious concern, both for personal behavior and for other uses that track employee behavior, e.g., monitoring hand washing by medical personnel. There is also a chance that the technology inside the employees’ bodies will become outdated, so some upgrade program will be needed.

“The chips employees got are about the size of a very large grain of rice. They’re intended to make it a little easier to do things like get into the office, log on to computers, and buy food and drinks in the company cafeteria.” https://t.co/rbDAWBSmnr

— Littal Shemer Haim (@Littalics) November 7, 2020

Workforce AI Ethics in a social context

The true dangers of AI are closer than we think

Karen Hao

AI is now screening job candidates, diagnosing disease, and identifying criminal suspects. But instead of making these decisions more efficient or fair, it’s often perpetuating the biases of the humans on whose decisions it was trained. Some AI ethical challenges and solutions were reviewed in an interview with William Isaac, who cochairs the Fairness, Accountability, and Transparency conference, the premier annual gathering of AI experts, social scientists, and lawyers working in this area.

According to Isaac, there are three challenges. First, there is a question about value alignment: how to design a system that can understand and implement the various preferences and values of a population? Second, there is still little empirical evidence validating that AI technologies will achieve broad-based social benefit. Lastly, the biggest question is what the robust mechanisms of oversight and accountability are. To overcome these risks, there are three areas to work on. The first is building a collective muscle for responsible innovation and oversight, which ensures all groups are engaged in the process of technological design. The second is accelerating the development of the sociotechnical tools to actually do this work. The last is providing more funding and training for researchers and practitioners to conduct this work.

“The challenge with facial recognition is we had to adjudicate these #ethical and values questions while we were publicly deploying the @technology. In the future, I hope that some of these conversations happen before the potential harms emerge.” https://t.co/JmtfHOzYhx

— Littal Shemer Haim (@Littalics) November 22, 2020

Edition #5 – October 2020

Workforce AI Ethics in strategic thinking

Artificial Intelligence (AI) Ethics: Ethics of AI and Ethical AI

Keng Siau

Artificial Intelligence-based technology has many achievements, such as facial recognition, medical diagnosis, and self-driving cars. AI promises enormous benefits for economic growth, social development, human well-being, and safety improvement. However, the low level of explainability, data biases, data security, data privacy, and ethical problems of AI-based technology pose significant risks for users, developers, humanity, and societies.

Addressing the ethical and moral challenges associated with AI is critical as AI advances. However, AI Ethics, i.e., the field related to the study of ethical issues in AI, is still in its infancy. To address AI Ethics, the author distinguishes between the Ethics of AI and how to build Ethical AI.

Ethics of AI studies the ethical principles, rules, guidelines, policies, and regulations related to AI. Ethical AI is an AI that performs and behaves ethically. The potential ethical and moral issues that AI may cause must be recognized and understood to formulate the necessary ethical principles, rules, guidelines, policies, and regulations for AI, i.e., Ethics of AI. With the appropriate Ethics of AI, AI that exhibits ethical behavior, i.e., Ethical AI, can be built.

What is the difference between the #Ethics of #AI and Ethical AI? https://t.co/svgoHtIMZ7

— Littal Shemer Haim (@Littalics) November 4, 2020

Workforce AI Ethics in practical advice

A Practical Guide to Building Ethical AI

This Practical Guide to Building Ethical AI points to reasons for failure in standard approaches to AI Ethical risk mitigation, such as the academic approach, an on-the-ground approach, and embracing only high-level AI ethics principles. It offers seven steps towards building a customized, operationalized, scalable, and sustainable data and AI ethics program.

Until recently, the discussions of AI Ethics were reserved for nonprofit organizations and academics. Today the biggest tech companies are putting together fast-growing teams to tackle the ethical problems that arise from the widespread collection, analysis, and use of massive troves of data, mainly when that data is used to train machine learning models. Failing to operationalize AI Ethics is a threat to every company’s bottom line due to reputation, regulation, and legal risks. It might also lead to wasted resources, inefficiencies in product development and deployment, and even an inability to use data to train AI models at all.

When handling AI Ethics through ad-hoc discussions on a per-product basis, with no clear protocol in place to identify, evaluate, and mitigate the risks, companies end up overlooking risks. AI ethics programs must be tailored to the business and the relevant regulatory needs. However, there are recommended steps towards building a customized, operationalized, scalable, and sustainable AI Ethics program: 1. Identify existing infrastructure that a data and AI ethics program can leverage; 2. Create a data and AI ethical risk framework that is tailored to your industry; 3. Change how you think about ethics by taking cues from the successes in health care; 4. Optimize guidance and tools for product managers; 5. Build organizational awareness; 6. Formally and informally incentivize employees to play a role in identifying AI ethical risks; 7. Monitor impacts and engage stakeholders.

This practical guide to #AI #Ethics points to reasons for failure in standard approaches and offers seven steps towards building a customized, operationalized, scalable, and sustainable data and AI ethics program. A new entry to my monthly resource report. https://t.co/PPALQ67O3y

— Littal Shemer Haim (@Littalics) October 27, 2020

Workforce AI Ethics in product reviews

Tech Is Transforming People Analytics. Is That a Good Thing?

Tomas Chamorro-Premuzic and Ian Bailie

The volume of data available to understand and predict employees’ behaviors will continue to grow exponentially, enabling more opportunities for managing through tech and data. However, this article questions whether the consequences of advanced technology in People Analytics are all good. People analytics is a deliberate and systematic attempt to make organizations more evidence-based. The article summarizes this domain’s technology development, including employee listening tools, technologies used to monitor safety and well-being, biometric data people willingly shared to assess Covid-19 risk, performance or productivity boosters, and more.

The “creepy” monitoring factor starts to kick in, as phones, sensors, wearables, and IoT detect and record our moves. When such tools become mandatory, employees may worry about their privacy and the usage of their data for purposes other than Covid-19 protection. HR departments must lead the conversation that addresses employee trust, corporate responsibilities, and new technology’s ethical implications. Organizations need to tackle the ethics and privacy topic and be open and transparent to build and maintain employee trust in the use of their data.

Business leaders must ensure no logical tension between what is good for the employer and what is good for the employee. But the warning in the article is clear: The temptation to force people into certain behaviors, or to use their data against them, is more real than one would think.

“Be sure there is no logical tension between what is good for the #employer, and what is good for the #employee. But the temptation to force #people into certain behaviors, or to use their #personal #data against them, is more real than one would think. https://t.co/C8EauYkb4H

— Littal Shemer Haim (@Littalics) November 4, 2020

Workforce AI Ethics in a social context

AI ethics groups are repeating one of society’s classic mistakes

Abhishek Gupta and Victoria Heath

The global AI ethics efforts aim to help everyone benefit from this technology and prevent it from causing harm. International organizations are racing to develop global guidelines for the ethical use of AI. However, these efforts will be futile if they fail to account for the cultural and regional contexts in which AI operates. Without more geographic representation, they will produce a global vision for AI ethics that reflects the perspectives of people in only a few regions of the world, particularly North America and northwestern Europe.

“Fairness,” “privacy,” and “bias” mean different things in different places. People also have different expectations of these concepts depending on their own political, social, and economic realities. If organizations working on global AI ethics fail to acknowledge this, they risk developing standards that are, at best, meaningless and ineffective across all the world’s regions. At worst, these flawed standards will lead to AI tools that preserve existing biases and are insensitive to local cultures.

To prevent such abuses, companies working on ethical guidelines for AI-powered systems need to engage users from around the world. They must also be aware of how their policies apply in different contexts. Unfortunately, the entire field of AI ethics is still at risk of limiting itself to languages, ideas, theories, and challenges from only a few regions. Nevertheless, the article enumerates some encouraging attempts to change this situation.

The global #AI #ethics efforts aim to help everyone benefit from this technology and to prevent it from causing harm. However, these efforts will be futile if they fail to account for the cultural and regional contexts in which AI operates. https://t.co/uxoin2SO1n

— Littal Shemer Haim (@Littalics) October 27, 2020

Edition #4 – September 2020

Workforce AI Ethics in strategic thinking

The Ethics of AI Ethics: An Evaluation of Guidelines

Thilo Hagendorff

The advanced application of AI in many fields raises discussion on AI ethics. Some ethics guidelines are already published. Although overlapping, they are not identical. So, how can one evaluate ethics guidelines? This article compares 22 approaches. Its analysis provides a detailed overview of AI ethics and examines the implementation of ethical principles in AI systems.

Unfortunately, according to this article, AI ethics is currently failing: Ethics lacks a reinforcement mechanism, and so, deviations from various codes of ethics have no consequences. Integrating ethics into institutions serves mainly as a marketing strategy. Reading ethics guidelines has no significant influence on the decision-making of software developers, who lack a feeling of accountability or a view of the moral significance of their work. Furthermore, economic incentives easily override commitment to ethical principles and values.

In several areas, ethically motivated efforts are undertaken to improve AI systems, particularly in fields where specific problems can be technically fixed: privacy protection, anti-discrimination, safety, or explainability. However, some significant ethical aspects that I find relevant to Workforce AI are still omitted from guidelines. These are a lack of diversity in the AI community, the weighting between algorithmic and human decision routines, “hidden” social costs of AI, and the problem of public-private partnerships and industry-funded research.

In order to close the gap between ethics and technical discourses, a stronger focus on technical details of AI and ML is required. But at the same time, AI ethics should focus on genuinely social aspects, uncover blind spots in knowledge, and strive for individual self-responsibility.

The Ethics of #AI #Ethics: An Evaluation of Guidelines https://t.co/j2HlsYIoSH 1st entry to September edition of my monthly review on #Workforce AI and #PeopleAnalytics Ethics. A comparison of 22 approaches and an examination of ethical principles implementation in AI systems.

— Littal Shemer Haim (@Littalics) September 30, 2020

Workforce AI Ethics in practical advice

Career Planning? Consider These HR Technology Roles of the Future

Artificial intelligence technologies and other automation solutions are disrupting the HR profession. A crucial part of HR’s response is to consider new responsibilities within its roles. It is not surprising to find this topic in HR-related content. However, it is encouraging to see that this sector sees AI Ethics as a part of its future domain. While general predictions about future roles are not necessarily useful, experts’ discussion about AI Ethics offers practical points that can serve us today.

Although the AI Ethics Officer is mentioned as a future role, its description sheds some light on present necessities. As new technologies are adopted by HR and generate unprecedented amounts of data about employees and candidates, the data must be carefully assessed, used, and protected. Furthermore, since decisions to deploy AI and ML are often made in departments other than HR, HR leaders must have a voice in ensuring AI-generated talent data is used ethically, so potential bias is prevented.

What does this mean for HR practitioners in organizations today? First, it is time to establish new practices in collaboration with the legal team to ensure the algorithms’ results are transparent, explainable, and bias-free. Moreover, it is time to start considering the balance between stakeholders in the organization. The HR department should ask how technologies serve both employers and employees, and not settle for discussing only which technologies it should be using.

(Thanks for sharing, Vijay Bankar)

“New technologies being adopted by #HR are generating unprecedented amounts of #data… Decisions to deploy #AI and #ML often are made in departments other than HR. It is essential that HR leaders have a voice in ensuring AI-generated talent data is used ethically.” https://t.co/l7hhPvdgZp

— Littal Shemer Haim (@Littalics) September 22, 2020

Workforce AI Ethics in product reviews

Google Offers to Help Others With the Tricky Ethics of AI

Tom Simonite

This entry is not related solely to Workforce AI. However, since all tech giants are players in the HR-Tech industry this way or another, I find this article thought-provoking. Today organizations receive cloud computing solutions from vendors like Amazon, Microsoft, and Google. Will they outsource the domain of AI Ethics to those vendors too? It turns out that Google’s cloud division will soon invite customers to do so.

Google’s AI ethics services, which the company plans to launch before the end of the year, will include spotting racial bias in computer vision systems and developing ethical guidelines that govern AI projects. In the long run, it may offer AI auditing for ethical integrity and ethics advice. Will we see a new business category called EaaS, i.e., ethics as a service? And if so, would it be right to consider companies such as Google as suppliers of such services?

On the one hand, Google has learned some AI ethics lessons the hard way, e.g., accidentally labeling black people as gorillas, which is the tip of the iceberg when considering how facial recognition systems are often less accurate for black people. Therefore, Google can leverage its experience and power to promote AI Ethics. But on the other hand, a company seeking to make money from AI may not be the best moral mentor on restraining technology. The inherent conflict of interest is relatively straightforward. Nevertheless, it is worthwhile to stay tuned for Google’s training courses on the topic.

Google will offer services of ethical #AI guidelines. #Ethics is crucial, as tech giants’ activities reveal. However, if they make money from AI, should they be the ones to educate businesses? I wonder, from an ethical perspective… https://t.co/YAiut7nKT0

— Littal Shemer Haim (@Littalics) September 10, 2020

Workforce AI Ethics in a social context

Employers are tracking us. Let’s track them back

Johanna Kinnock

Employee surveillance is growing, and most employers are tracking their workers in one way or another. Research firm Gartner says half of the companies were already using “non-traditional” listening techniques like email scraping and workspace tracking in 2018. They estimate the figure to have risen to around 80% by now. Should employees worry? Should they respond to protect themselves? Workplace data expert Christina Colclough thinks they should. Colclough has created an app, WeClock, that enables employees to track their data and share it with unions.

Employees and their unions need to push back to ensure that their whole online existence doesn’t become their employers’ property. Data from employee surveillance is used to boost productivity, gain competitive advantage, and grow profits, but it cements the position of power that employers have over employees. Regulation for individual rights to data does not offer sufficient remedy yet. Decisions about employees and candidates present or take away certain opportunities based on past actions. Algorithms may not show certain job offers or career opportunities. There is a vast gap between what companies know about employees and what employees know about themselves.

Digitization doesn’t necessarily mean that only employers should have control and access over employee data. The app WeClock enables employees to track, and share with their unions, things like how far they must travel to work, whether they’re taking their allotted breaks, and how long they spend working out of hours. This will provide a source of aggregate data about critical issues affecting employee wellbeing.

#GDPR represented a huge step for individual rights to #data. Potential risks related to #workplace #surveillance mean it needs its own specific set of prohibitions. Amendments that would have given #workers greater rights over their data were not adopted. https://t.co/y3Eh7DaT5l

— Littal Shemer Haim (@Littalics) September 14, 2020

Edition #3 – August 2020

Workforce AI Ethics in strategic thinking

Questions about your AI Ethics

Are words like bias, privacy, liability, design, and management raised in strategic discussions in your organization? And if so, are such words followed by an exclamation mark or a question mark? I consider this article strategic, not merely because it covers 24 ethical questions that you should think about when implementing AI, but because it is actually an infinite list of questions. Each question you raise may bring more questions instead of answers. As AI technology evolves and penetration rates in organizations sharply increase, this list will probably represent some of our routine discussions.

Some questions I find most important are: What are the limits of our intrusion into workers’ behavior and sentiments? What rights do employees have to information about themselves? How do we treat our workers who are not employees (gig workers, temps, subcontractors)? Is our machine-led learning system actually developing our organization in the direction we want? How, exactly, do you tell if the machine is producing the results you actually want and need? But read through the entire list, and add your own.

The ethics of AI is more than a committee that produces hard rules. The implementation is not only technical but rather an obligation to have a clear sense of what the organization’s ethics are. It may bring many new questions. However, in a reality of rapidly evolving technologies, don’t be surprised that a reasonable answer may be ‘I don’t know’. Simply follow it with ‘How do we find out?’

#Ethics of #Workforce #AI: My monthly review opens with https://t.co/UZMeZcP9wn by @JohnSumser AI implementation is not only technical but rather an obligation to have a clear sense of the organization’s ethics. It may bring new questions. A reasonable answer may be: I don’t know

— Littal Shemer Haim (@Littalics) August 31, 2020

Workforce AI Ethics in practical advice

INSIGHT: Hiring Tests Need Revamp to End Legal Bias

Ron Edwards

Does artificial intelligence push recruitment practices toward less fairness? Pending legislation in New York City and California may suggest it does. Is it a first step in ending legal hiring bias? This call to update US legislation, specifically to revamp hiring tests to end legal bias, offers an eye-opening perspective to all prospects and clients of AI solutions. Although targeted at government institutions, its argument can be considered advice to everyone in this field. Don’t wait for regulation to critique what vendors put on the shelves.

The article describes how hiring tools can negatively impact women, people of color, and those with disabilities, e.g., by analyzing facial expressions using AI software or collecting information unrelated to the job in question. Employers use cognitive ability assessments that enable significantly more white candidates to pass, in comparison to minorities. A high-profile failure is also mentioned: Amazon built an AI hiring tool that filtered out women’s resumes for engineering positions.

For workforce diversity to improve, 20th-century laws should be updated in accordance with 21st-century technologies. California and New York City are considering legislation that would set standards for AI assessments in hiring. Its requirements include pre-testing for bias, annual auditing to ensure no adverse impact on demographic groups, and notifying candidates about the characteristics assessed by AI tools. This is a positive direction that organizations should embrace even before the long legislative processes end, because all candidates deserve an equal chance to be hired, promoted, and rewarded consistent with their talents.
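To make "no adverse impact on demographic groups" a little more concrete, here is a minimal sketch of one common screening check, the "four-fifths rule" used in US employment guidelines: a group whose selection rate falls below 80% of the highest group's rate is flagged for review. This is my own illustration, not something the article or the pending legislation prescribes; the group names, the counts, and the 0.8 threshold as applied here are assumptions.

```python
# Illustrative sketch only: hypothetical counts, not data from the article.
# Each entry maps a demographic group to (candidates_passed, candidates_assessed).
outcomes = {
    "group_a": (48, 80),
    "group_b": (12, 40),
}

FOUR_FIFTHS = 0.8  # assumed threshold, following the common "four-fifths rule"


def selection_rate(passed, assessed):
    """Share of assessed candidates who passed the AI assessment."""
    return passed / assessed


def adverse_impact_ratios(outcomes):
    """Divide each group's selection rate by the highest group's rate."""
    rates = {group: selection_rate(*counts) for group, counts in outcomes.items()}
    reference = max(rates.values())
    return {group: rate / reference for group, rate in rates.items()}


ratios = adverse_impact_ratios(outcomes)
flagged = [group for group, ratio in ratios.items() if ratio < FOUR_FIFTHS]

for group, ratio in ratios.items():
    print(f"{group}: impact ratio {ratio:.2f}")
print("Groups to review:", flagged)
```

A flag from a check like this is a prompt for a closer look, not a verdict; a real audit would also consider sample sizes and statistical significance.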

(Thank you Jouko van Aggelen for sharing)

CA and NY are considering legislation that would set standards for #AI assessments in #hiring. Its requirements include pre-testing for #bias, annual #auditing to ensure no adverse impact on demographic groups, and candidates’ notification https://t.co/SlxCA88oWt

— Littal Shemer Haim (@Littalics) August 31, 2020

Workforce AI Ethics in product reviews

Why using technology to spy on home-working employees may be a bad idea

Gabriel Burdin, Simon D. Halliday, and Fabio Landini

I’ve already offered in this section dystopian descriptions of employee surveillance while working from home. Some remote employees are photographed along with their desktop screenshots every few minutes. Others are tracked while browsing the web, making online calls, posting on social media, and sending private messages. The purpose of such surveillance solutions is to give employees incentives to maintain their productivity, or in other words, to prevent them from slacking off or shirking during working hours. However, psychological experiments reveal that instead of boosting or maintaining productivity, the variety of surveillance solutions might lead to the opposite consequence.

Research findings show that using technology to spy on home-working employees may be a bad idea after all. Standard economic theory would predict that intensive online workplace surveillance is effective since employees are motivated purely by self-interest and care only about their material payoffs. However, empirical evidence suggests that people have more complex motives. Alongside material payoffs, people value autonomy and dislike external control. They are also motivated by reciprocity and their beliefs about others’ intentions. Employees reward trusting employers who avoid control with their own efforts. Employers may trigger employees’ positive reciprocity and support their productivity simply by refraining from greater control.

Interestingly, the debate about remote workforce surveillance, which I included in previous editions of this monthly review, was focused mainly on employee privacy and the blurred boundaries between work and non-work. These perspectives, important as they are, are not comprehensive enough to understand employment relations and conflicts. While employers would like to boost productivity for profit, surveillance technologies that monitor work from home might be the wrong solution, because they signal distrust and reduce intrinsic motivation to perform well. Ignoring the potential reactions to surveillance solutions may undermine the goal of increased productivity, let alone harm employees’ dignity.

Research findings show that using #technology to #spy on home-working #employees may be a bad idea after all. People value #autonomy and dislike external #control. They are also motivated by reciprocity and their beliefs about others’ intentions. https://t.co/WhqitFAn3g

— Littal Shemer Haim (@Littalics) August 31, 2020

Workforce AI Ethics in a social context

21 HR Jobs of the Future

Jeanne C. Meister, Robert H. Brown

Some writers perceive the COVID-19 era as a tremendous opportunity for the HR sector to lead organizations in navigating the future. But a more realistic perspective would emphasize that in this turbulent time, even the best intentions to support people and guide them toward acquiring new skill sets and embracing new career paths won’t help if the business crashes due to COVID-19. In other words, it’s not just the employees who need to overcome; the organizations that employ them need to survive the crisis. However, I do witness a mindset shift in the HR sector, which in my opinion represents a continuous development that COVID-19 may accelerate but certainly did not create. For that reason, I was happy to read about research that demonstrated such a shift and creatively described 21 HR jobs of the future.

Nearly 100 CHROs, CLOs, and VPs of talent and workforce transformation participated in brainstorming and considered economic, political, demographic, societal, cultural, business, and technology trends to envision how HR’s role might evolve over the next 10 years. The hypothetical future HR roles they created represent a growing understanding of crucial issues such as individual and organizational resilience, organizational trust and safety, creativity and innovation, data literacy, and human-machine partnerships. Those issues and the roles derived from them are not necessarily in the HR domain. However, the perceptions of HR leaders represent a pivot in the organizational state of mind.

As questions start being raised around the potential for bias, inaccuracy, and lack of transparency in workforce AI solutions, more senior HR leaders understand the need for systematically ensuring fairness, explainability, and accountability. The writers believe this could lead to HR roles such as the Human Bias Officer, responsible for helping mitigate bias across all business functions. I believe it’s an encouraging direction in organizations’ agendas toward responsibility in the broad social context. And so, I’m happy to end this monthly edition with such a positive perspective.

Remember kids, when recruiting machines test you remotely, monitor your responses, record your biometrics (voice, face), or when your employer monitors your stress and anxiety by your interaction with mobile devices, turn to the genetic diversity officer if you feel discriminated https://t.co/1WVRvV8Ykx

— Littal Shemer Haim (@Littalics) August 13, 2020

Edition #2 – July 2020

Workforce AI Ethics in strategic thinking

Ethical principles in machine learning and artificial intelligence: cases from the field and possible ways forward

Samuele Lo Piano

More and more decisions related to the people aspects of the business are being based on machine-learning algorithms. Ethical questions are raised from time to time, e.g., when “black box” algorithms create controversial outcomes. However, as of this writing, I have not found a single standard or framework that guides the HR-Tech industry beyond regional regulations.

Until such a standard is established, any practitioner who deals with the subject needs a thorough review of the literature that leads to available tools and documentation. This Nature article offers one. Although it addresses ethical questions related to risk assessments in criminal justice systems and autonomous vehicles, I consider reading it a strategic step towards ethical considerations in the procurement of workforce AI. Particularly, the article focuses on fairness, accuracy, accountability, and transparency, and offers guidelines and references for these issues.

The article lists research questions around the ethical principles in AI, offers guidelines and literature on the dimensions of AI ethics, and discusses actions towards the inclusion of these dimensions in the future of AI ethics. If you start the journey toward understanding the ethics of workforce AI, you should use this article as an intellectual hub for further exploration of academic and practical conversations.

(Thank you Andrew Neff for the tweet)

Follow my #Workforce #AI #Ethics monthly review of resources here https://t.co/fMW3Qex2ns This article will open today the July edition, on strategic thinking category. Other categories: practical advice, product reviews, and a social context. Subscribe! https://t.co/QmrL694jDG https://t.co/6ce0Ldt06f

— Littal Shemer Haim (@Littalics) July 22, 2020

Workforce AI Ethics in practical advice

23 sources of data bias for #machinelearning and #deeplearning

Ajit Jaokar

This list includes 23 types of bias in data for machine learning. Actually, it quotes an entire paragraph from a survey on bias and fairness in ML. Why do I put this content in the practical advice section of this monthly review? I think that although most business leaders in organizations may not be legally responsible for such biases in workforce AI, at least not directly, they do need to be aware of them, ethically. After all, AI supports decision-making, but humans still have the last word, and they must take everything into account, including justice and fairness.

It’s good to have such a list. I advise you to come back to it from time to time, to refresh your memory and be inspired. So, what kinds of biases can you find in this list? Plenty, among them: Aggregation Bias, Population Bias, Simpson’s Paradox, Longitudinal Data Fallacy, Sampling Bias, Behavioral Bias, Content Production Bias, Linking Bias, Popularity Bias, Algorithmic Bias, User Interaction Bias, Presentation Bias, Social Bias, Emergent Bias, Self-Selection Bias, Omitted Variable Bias, Cause-Effect Bias, Funding Bias. Did you try to test yourself and count how many of these biases you already know?

Some biases listed here can be resolved by research methodology. That’s the reason I include some examples of such biases in my introductory courses. So if you are a People Analytics practitioner, don’t hesitate to re-open your old notebooks. Here’s one of my favorites, which I enjoy presenting to students: Simpson’s Paradox! It arose during the gender bias lawsuit against UC Berkeley over university admissions. Sometimes subgroups, in this case women, may be quite different. After analyzing graduate school admissions data, it seemed like there was a bias against women, a smaller fraction of whom were being admitted to graduate programs compared to their male counterparts. However, when the admissions data was analyzed separately across departments, the findings revealed that more women actually applied to departments with lower admission rates for both genders.
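For readers who like to see the arithmetic, here is a small sketch of how Simpson’s Paradox can arise. The numbers are hypothetical, chosen only to reproduce the pattern; they are not the actual Berkeley admissions data, and the two department names are placeholders.

```python
# Hypothetical numbers chosen to illustrate the paradox (NOT the Berkeley data).
# Each entry maps a group to (applied, admitted) within a department.
applications = {
    "Department A": {"men": (800, 480), "women": (100, 65)},  # high admission rate
    "Department B": {"men": (200, 40), "women": (900, 225)},  # low admission rate
}


def rate(applied, admitted):
    """Admission rate as a fraction of applicants."""
    return admitted / applied


def overall_rate(group):
    """Admission rate for a group when all departments are pooled together."""
    applied = sum(dept[group][0] for dept in applications.values())
    admitted = sum(dept[group][1] for dept in applications.values())
    return rate(applied, admitted)


# Within each department, compare the two groups.
for name, dept in applications.items():
    per_group = {group: f"{rate(*counts):.0%}" for group, counts in dept.items()}
    print(name, per_group)

# Pooled across departments, the comparison flips.
print("Overall", {group: f"{overall_rate(group):.0%}" for group in ("men", "women")})
```

In this toy example, women have the higher admission rate inside each department, yet the lower rate overall, simply because most of them applied to the department that admits fewer applicants.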

A list of 23 types of #bias in #data for #MachineLearning https://t.co/Y0ffuDKM4W You may not be responsible, legally, but you should be aware of it, ethically. More thoughts about #AI #Ethics in my monthly review https://t.co/fMW3Qex2ns subscribe to receive it in your inbox

— Littal Shemer Haim (@Littalics) July 20, 2020

Workforce AI Ethics in product reviews

Remote working: This company is using fitness trackers and AI to monitor workers’ lockdown stress

Owen Hughes

PwC is harnessing AI and fitness-tracking wearables to gain a deeper understanding of how work and external stressors are impacting employees’ state of mind. During the COVID-19 crisis, companies promote healthy working habits to ensure employees are provided with the support they need while working from home. What can a company offer beyond catch-ups on Zoom? PwC’s approach is novel, yet, to me, controversial.

The company has been running a pilot scheme that combines ML with wearable devices to understand how lifestyle habits and external factors are impacting its staff. Employees volunteered to use fitness trackers that collect biometric data and connect it to cognitive tests, to manage stress better. Obviously, factors such as sleep, exercise, and workload influence employee performance, and balancing work and home life benefits mental health and wellbeing.

Volunteering rates were higher than expected. Understanding human performance and human wellness is, clearly, in the interest of both employees and employers. However, in my opinion, it must initiate a discussion about the boundaries of organizational monitoring. Is it OK to collect employee biometric measures, e.g., pulse rate and sleeping patterns, and combine them with cognitive tests and deeper personality traits, in the organizational arena? If it is, how far is it OK to go with genetic information? How different are these answers in case the employer also offers medical insurance as a benefit to its employees? Tracking mental and physical responses to understand work may be essential. Still, employers may provide education and tools without being directly involved in data collection and maintenance. Even when participation is voluntary, there is always a self-selection bias among employees (see the previous category in this review), and so, the beneficial results are not equally distributed.

(Thank you David Green, for the tweet)

PwC is harnessing #AI and fitness-tracking wearables to gain a deeper understanding of how #work and stressors are impacting #employees‘ state of mind. Would you like to get access to my DNA too??? Seriously, can’t people track health metrics without involving their employer? https://t.co/RkGa4x42cy

— Littal Shemer Haim (@Littalics) July 2, 2020

Workforce AI Ethics in a social context

Man is to Programmer as Woman is to Homemaker: Bias in Machine Learning

Emily Maxie

We often hear about gender inequities in the workplace. A lot of factors are at play: the persistence of traditional gender roles, unconscious bias, blatant sexism, lack of role models for girls who aspire to lead in STEM. However, technology is also to blame because machine learning has the potential to reinforce cultural biases. This article is not new, but it offers a clear explanation for the non-techies on how natural language processing programs exhibited gender stereotypes.

In 2013, to understand the relationships between words, Google researchers created a neural network algorithm that enables computers to understand human speech. To train this algorithm, they used the massive data set at their fingertips: Google News articles. The result was widely accepted and incorporated into all sorts of other software, including recommendation engines and job-search systems. However, the algorithm created troubling correlations between words. It was working correctly, but it learned the biases inherent in the text on Google News.

In order to solve the issue, researchers had to identify the difference between a legitimate gender difference and a biased gender difference. They set out to determine the terms that are problematic and exclude them while leaving the unbiased terms untouched. Bias in training data can be mitigated, but only if someone recognizes that it’s there and knows how to correct it. Sadly, it would be impossible to tell if all the uses, in all kinds of software, are fixed, even if Google corrected the bias.
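For the technically curious, the idea can be sketched with toy numbers. In such models each word is a vector, and a bias shows up as a gender component in a word that should be gender-neutral. The three-dimensional vectors below are invented for illustration (real models use hundreds of dimensions learned from text), and the simple projection used here is a simplified stand-in, not the researchers' exact procedure.

```python
import math

# Toy, hand-made word vectors (assumption: real embeddings are learned, not written by hand).
vectors = {
    "man":        [1.0, 0.5, 0.5],
    "woman":      [-1.0, 0.5, 0.5],
    "programmer": [0.6, 1.0, 0.0],
    "homemaker":  [-0.6, 0.0, 1.0],
}


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def unit(u):
    """Scale a vector to length 1."""
    length = math.sqrt(dot(u, u))
    return [a / length for a in u]


# A "gender direction" estimated from one definitional pair of words.
gender_axis = unit([a - b for a, b in zip(vectors["man"], vectors["woman"])])


def gender_component(vec):
    """How far a vector leans along the gender direction (positive = toward 'man')."""
    return dot(vec, gender_axis)


def neutralize(vec):
    """Remove the gender component, leaving the rest of the vector untouched."""
    g = gender_component(vec)
    return [a - g * b for a, b in zip(vec, gender_axis)]


for word in ("programmer", "homemaker"):
    before = gender_component(vectors[word])
    after = gender_component(neutralize(vectors[word]))
    print(f"{word}: gender component before {before:+.2f}, after {after:+.2f}")
```

Words that are legitimately gendered, such as "man" and "woman" themselves, would be left as they are; only occupation-like words are neutralized, which mirrors the distinction the researchers had to draw.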

(Thank you Max Blumberg for highlighting this article)

“Today, it’s easier than ever to add #NLP or #FacialRecognition to products. It’s also more important than ever to remember that the products we build can project #biases of today onto the world we live in tomorrow.” Thx @Max_Blumberg for highlighting this https://t.co/4t048CG4S8

— Littal Shemer Haim (@Littalics) July 21, 2020

Edition #1 – June 2020

Workforce AI Ethics in strategic thinking

Ethics and the future of work

Erica Volini, Jeff Schwartz, Brad Denny

The way work is done changes, as the integration between employees, alternative workforces, technology, and specifically automation, becomes more prevalent. Deloitte’s article Ethics and the future of work enumerates the increasing range of ethical challenges that managers face as a result. Based on a survey, it states four factors at the top of ethical challenges related to the future of work: legal and regulatory requirements, rapid adoption of AI in the workplace, changes in workforce composition, and pressure from external stakeholders. Organizations are not ready to manage ethical challenges. Though relatively prepared to handle privacy and control of employee data, executives’ responses indicate that organizations are unprepared for automation and the use of algorithms in the workplace.

According to Deloitte, organizations should change their perspective when approaching new ethical questions, and shift from asking only “could we” to also asking “how should we.” The article demonstrates how to do so. For example, instead of asking “could we use surveillance technology?” organizations may ask “how should we enhance both productivity and employee safety?”.

Organizations can respond to ethical challenges in various ways. Some organizations create executive positions that focus on driving ethical decision-making. Other organizations use new technologies in ways that can have clear benefits for workers themselves. The point is that instead of reacting to ethical dilemmas as they arise, organizations should anticipate, plan for, and manage ethics as part of their strategy and mission, and focus on how these issues may affect different stakeholders.

Happy to read this finally! https://t.co/Z6Gqmpt7Dw “Organizations felt least ready to address ethical challenges involving the intersection of people and technology” as @DeloitteHC survey reveals. I couldn’t ask for better validation for my recent endeavor in #AI #Ethics

— Littal Shemer Haim (@Littalics) June 11, 2020

Workforce AI Ethics in practical advice

Walking the tightrope of People Analytics – Balancing value and trust

The People Analytics domain will eventually transform into AI products. In the early days, most People Analytics practices were projects or internal tools developed in organizations. As the industry matures, more and more organizations automate, starting with their HR reporting. HR-tech products and platforms that offer solutions based on predictive analytics and natural language processing are not rare anymore, although mostly seen in large organizations. However, the discussion about Ethics in HR-tech is still in its infancy. In my opinion, the conversations between the different disciplines – HR and OD, ML and AI, and Ethics – are the building blocks of the People Analytics field in the future. The article Walking the tightrope of People Analytics – Balancing value and trust is an excellent example of such a multidisciplinary conversation.

People Analytics projects might go wrong in many ways. To prevent the harmful consequences of lousy analysis, HR leaders must ask essential questions about the balance of interests between the employer and the employees, the value delivered to each party, the fairness and transparency of the analysis, and the risk of illegal or immoral application of the results. The HR sector needs an ethical framework to address these questions.

This article takes this need a step further. It defines ethics and reviews its three primary paradigms, i.e., deontology, consequentialism, and virtue ethics. Then it derives a practical principle from each paradigm, respectively: transparency, function, and alignment. Each of these principles offers three questions that should be raised before, during, and after an analytics project. This framework goes beyond regulation. It helps to make sure that new analytics capabilities that improve decision making are not sacrificing employee care.

(Thank you David Green, for the tweet)

Here’s an elegant and parsimonious way to transform philosophical principles into practical instructions, when you deal with #Ethics in #PeopleAnalytics. However, is it applicable to all #AI apps that #HR uses to analyze the workforce? Especially “audit trail” – Any example? Thx! https://t.co/3stDVp3y1r

— Littal Shemer Haim (@Littalics) June 14, 2020

Workforce AI Ethics in product reviews

This startup is using AI to give workers a “productivity score”

In the last few months, the COVID-19 pandemic caused millions of people to stop going into offices and start doing their jobs from home. A controversial consequence of remote work was the emerging use of surveillance software. Many new applications now enable employers to track their employees’ activities. Some record keyboard strokes, mouse movements, websites visited, and users’ screens. Others monitor interactions between employees to identify patterns of collaboration.

MIT Technology Review covered a startup that uses AI to give workers a productivity score, which enables managers to identify those who are most worth retaining and those who are not. The review raises an important question: do you owe it to your employer to be as productive as possible, above all else? Productivity was always crucial from the organizational point of view. However, in a time of pandemic, it has additional perspectives. People must cope with multiple challenges, including health, child care, and balancing work at home with personal needs. But organizations struggle too, to survive. The potential conflicts of interest, and the surveillance available now, put additional weight on that question.

When it runs in the background all the time, monitoring whatever data trail a company can provide for its employees, an algorithm can learn the typical workflows of different workers. It can analyze triggers, tasks, and processes. Once it has discovered a regular pattern of employee behavior, it can calculate a productivity score, which is agnostic to the employee role, though it works best with repetitive tasks. Though they contribute to productivity by identifying what could be made more efficient or automated, such algorithms might also encode hidden bias and make people feel untrusted.

Enaible offers #employers tools of #surveillance on employees, but the critic points to #trust issues. As #productivity measures are automated by #AI, #ethics questions should also be raised. https://t.co/piHWLhcspb

— Littal Shemer Haim (@Littalics) June 16, 2020

Workforce AI Ethics in a social context

The Institute for Ethical AI & Machine Learning

The Institute for Ethical AI & Machine Learning is a UK-based research center that carries out technical research into processes and frameworks that support the responsible development, deployment, and operation of machine learning systems. The institute’s vision is to “minimize the risk of AI and unlock its full power through a framework that ensures the ethical and conscious development of AI projects.” My reading about this organization’s contribution is through a lens of workforce AI applications. However, this organization aims to influence all industries.

Volunteering domain experts in this institute articulated “The Responsible Machine Learning Principles” that guide technologists. There are eight principles: Human augmentation, Bias evaluation, Explainability by justification, Reproducible operations, Displacement strategy, Practical accuracy, Trust by privacy, and Security risks. Each principle includes a definition, detailed description, examples, and resources. I think every workshop for AI developers should cover these principles, and especially in the HR-Tech industry.

The Institute for Ethical AI & ML offers a valuable tool, called AI-RFX. It is a set of templates that empowers industry practitioners who oversee procurement to raise the bar for AI safety, quality, and performance. Practically, this open-source tool converts the eight principles for responsible ML into a checklist.

Last but not least on my monthly edition of #Ethics in #PeopleAnalytics and #AI at #Work – best resources, discovered monthly https://t.co/XRVHhdWIos UK-based research center that carries out technical research into processes and frameworks that support responsible #ML

— Littal Shemer Haim (@Littalics) July 1, 2020